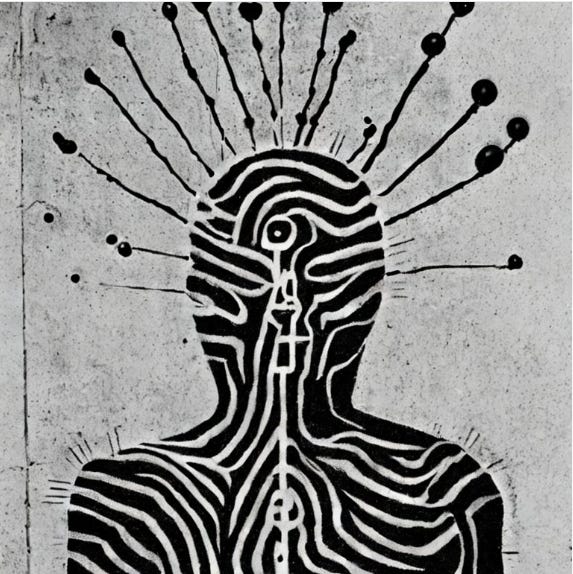

The Eye No Longer Testifies

Deepfakes, Fakes, and Fake-Outs

Last night I initiated a “conversation” with Perplexity.AI about the onslaught of AI-generated videos swamping the internet. I opened with a few key thoughts and observations, we went back and forth a few times, and eventually arrived at this:

The Great Dissolve:

Notes on the era of synthetic vision

We are witnessing not simply the rise of fake AI videos but the decay of visual literacy itself. For centuries, the moving image served as the most persuasive document of reality; now, it has inverted into its opposite—a persuasive simulation of whatever anyone wishes reality to be. The acceleration is staggering: in 2023, tools like Runway and Pika could produce short, uncanny clips; by 2025, open‑weight diffusion models convert a single prompt or selfie into minutes of “documentary” footage. Synthetic cinema has become a social medium.

This shift dissolves not only public truth but also private perception. A teenager can now fabricate a celebrity scandal indistinguishable from a TMZ report; more chillingly, a jilted lover can manufacture an “intimate” video featuring an ex. In several high‑profile American and Korean cases, students’ faces were pasted into pornographic scenes using free online tools, producing emotional damage without any physical encounter. The synthetic and the traumatic have converged.

Politically, synthetic reality already eclipses propaganda’s old logic. In a 2024 Mexican gubernatorial race, manipulated AI videos circulated faster than campaign ads, outpacing attempts at correction. The next phase is ambient misinformation—an ecosystem where falsehood requires no author, merely algorithmic drift. When everyone can make and circulate plausible video, no one can be held accountable for what it shows. Moral responsibility atomizes.

The consequence is the ontological vertigo that the initial essay calls “information paralysis.” The paralysis arises because every image now whispers: maybe not. People begin to doubt even verified footage of war crimes or natural disasters, assuming that slick unreality hides beneath every frame. The observer becomes a permanent skeptic, yet this skepticism, though born of vigilance, breeds apathy. In a world where nothing can be believed, nothing needs to be acted upon.

Still, this isn’t merely decay—it’s also a creative mutation. Artists and documentarians are already experimenting with synthetic verisimilitude as a critical language, using AI‑generated footage to expose precisely the mechanics of deception. The crisis might ultimately restore value to eyewitness testimony, embodied presence, and local trust networks. But those will have to be reinvented. Until then, every screen contains both the world and its hallucination, flickering at identical resolution.